Based on the opening segment of Luis Martín’s remarks at the VIII AI Congress (El Independiente Journal), this article formalizes a systems-engineering view of artificial intelligence as a lever for informational, strategic, and operational superiority.

We model risk and opportunity in adversarial settings, outline a maturity ladder from insufficiency to superiority, and distill an implementation playbook for organizations and, critically, European institutions.

The piece contrasts data-hungry machine learning with a neurocognitive approach to agent design (reasoning-centric, knowledge-first, and low-data), and argues that capability building and vulnerability reduction, not intention, are the binding constraints for both security and growth.

1) Context: Hybrid conflict is the ambient environment

Martín frames Europe as operating inside a hybrid conflict (an ongoing superposition of economic, technological, and military contests). In such an environment, the tempo of decision-making and the precision of evidence aggregation dominate outcomes.

The diagnosis is blunt: Europe’s aggregate risk is high; its realized opportunity is low. The remedy, he argues, is not rhetorical alignment but engineered superiority.

2) A quantitative lens on risk and opportunity

A pragmatic, intelligence-community style decomposition is useful:

Threat: T = Adversary Intention × Adversary Capability

Risk (to us): R = T × Our Vulnerability = (Adversary Intention × Adversary Capability) × Our Vulnerability

Opportunity (for us): dominated by our intention and capability, and by how effectively we shrink our own vulnerabilities while exploiting existing openings. (Intention alone is cheap; capability and hardening are the multipliers that move real outcomes.)

Operational takeaway: in adversarial domains, assume intention exists and optimize against capabilities and vulnerabilities. This mirrors U.S. national-security doctrine that treats hostile intent as a constant and concentrates on capability detection and vulnerability reduction.
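The decomposition can be made concrete with a small sketch. The 0-to-1 scales, the `Adversary` class, and the example numbers are illustrative assumptions, not part of the talk; the only thing taken from the text is the multiplicative form and the idea of holding intention constant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Adversary:
    name: str
    capability: float       # hypothetical scale: 0.0 (none) to 1.0 (full)
    intention: float = 1.0  # held constant, following the operational takeaway


def threat(adv: Adversary) -> float:
    """T = adversary intention x adversary capability."""
    return adv.intention * adv.capability


def risk(adv: Adversary, our_vulnerability: float) -> float:
    """R = T x our vulnerability."""
    return threat(adv) * our_vulnerability


actor = Adversary(name="actor-a", capability=0.8)
baseline = risk(actor, our_vulnerability=0.5)   # 0.8 * 0.5
hardened = risk(actor, our_vulnerability=0.25)  # 0.8 * 0.25
print(baseline, hardened)
```

With intention fixed at 1, halving our vulnerability halves the risk, which is the sense in which hardening acts as a multiplier under this model.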

3) The superiority ladder: from insufficiency to dominance

Martín describes superiority as a trajectory, not a switch. For informational capability (and, by propagation, strategic and operational capability), organizations climb a ladder:

1. Insufficiency → 2. Sufficiency → 3. Efficiency → 4. Resilience → 5. Advantage (transient) → 6. Dominance → 7. Superiority

Each rung has measurable gates (coverage, latency, precision, robustness, and cost per correct decision). AI systems should be deployed explicitly to raise the rung with the highest return on marginal effort.
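One way to read "highest return on marginal effort" is as a simple ratio of estimated gain to estimated effort for climbing one rung. The sketch below assumes that reading; the `gain` and `effort` figures, the capability names as dictionary keys, and the helper functions are hypothetical.

```python
from enum import IntEnum


class Rung(IntEnum):
    INSUFFICIENCY = 1
    SUFFICIENCY = 2
    EFFICIENCY = 3
    RESILIENCE = 4
    ADVANTAGE = 5
    DOMINANCE = 6
    SUPERIORITY = 7


# Hypothetical estimates: current rung, benefit of climbing one rung, effort to do so.
candidates = {
    "information": {"rung": Rung.SUFFICIENCY, "gain": 8.0, "effort": 2.0},
    "strategy": {"rung": Rung.INSUFFICIENCY, "gain": 9.0, "effort": 6.0},
    "operations": {"rung": Rung.EFFICIENCY, "gain": 3.0, "effort": 1.5},
}


def marginal_return(entry: dict) -> float:
    """Estimated gain per unit of effort for the next rung."""
    return entry["gain"] / entry["effort"]


def next_investment(caps: dict) -> str:
    """Pick the capability whose next rung has the best gain/effort ratio."""
    # Capabilities already at the top rung have nothing left to climb.
    open_caps = {k: v for k, v in caps.items() if v["rung"] < Rung.SUPERIORITY}
    return max(open_caps, key=lambda k: marginal_return(open_caps[k]))


best = next_investment(candidates)
print(best)
```

Here the lowest rung (strategy) is not the one selected, because its estimated effort is high relative to its gain; the selection rule rewards leverage rather than position on the ladder.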

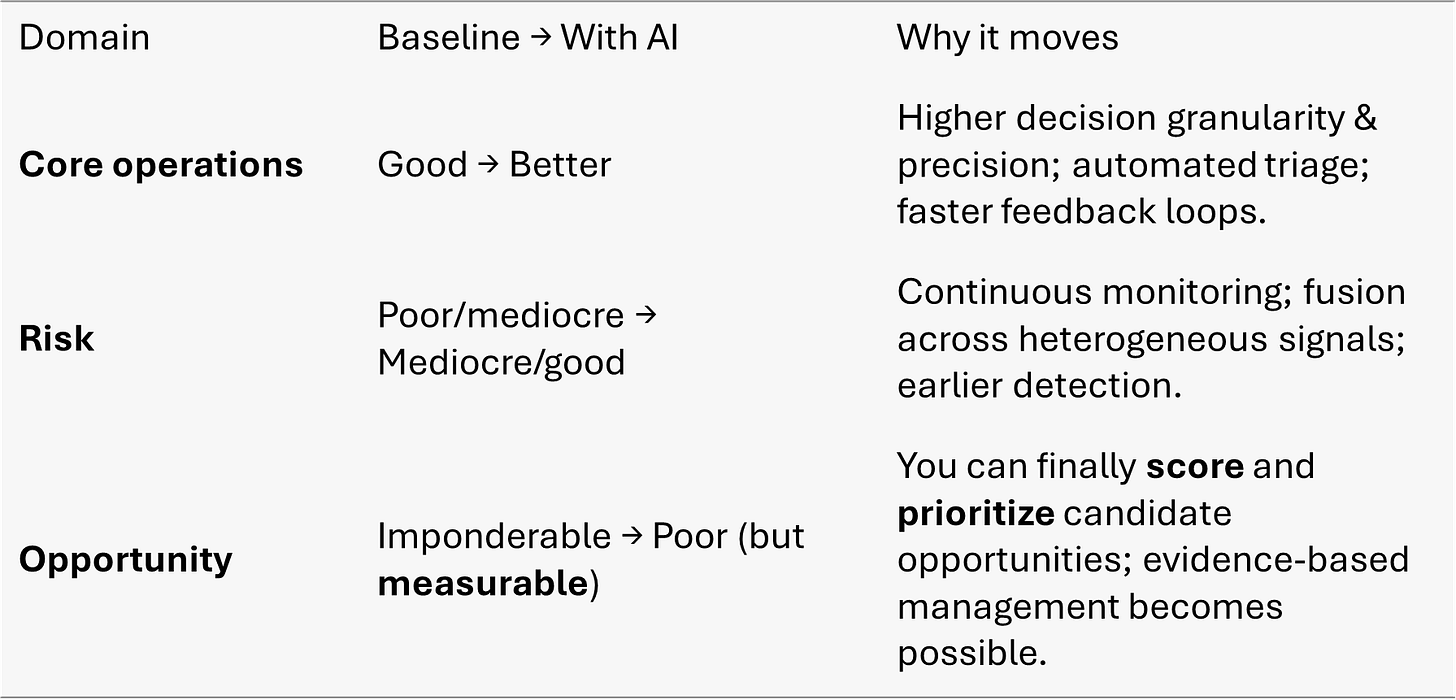

4) What AI actually changes inside an organization

Before AI, most organizations perform core operations well enough, while:

Risk management is mediocre to poor.

Opportunity sensing is imponderable (not instrumented, not scored).

When AI systems are inserted with a superiority objective, a key shift commonly occurs:

The crucial inflection is not cosmetic “AI features,” but the instrumentation of uncertainty so that opportunity and risk become auditable portfolios.
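As a rough illustration of what an auditable portfolio could look like, the sketch below scores each item by probability times impact and keeps a list of supporting evidence per item. The expected-value scoring, the `Item` fields, and all entries are assumptions chosen for illustration; the talk does not prescribe a scoring rule.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Item:
    label: str
    kind: str            # "risk" or "opportunity"
    probability: float   # analyst or model estimate in [0, 1]
    impact: float        # magnitude in a common, hypothetical unit
    evidence: List[str] = field(default_factory=list)  # sources backing the estimate

    @property
    def score(self) -> float:
        # Expected magnitude, signed so that risks count against the portfolio.
        sign = -1.0 if self.kind == "risk" else 1.0
        return sign * self.probability * self.impact


portfolio = [
    Item("supplier outage", "risk", 0.25, 400.0, ["audit report"]),
    Item("new export market", "opportunity", 0.5, 300.0, ["trade data"]),
    Item("regulatory fine", "risk", 0.125, 800.0),
]

# Rank by absolute expected magnitude, sum the signed scores, and flag
# items whose estimates have no recorded evidence behind them.
ranked = sorted(portfolio, key=lambda i: abs(i.score), reverse=True)
net_exposure = sum(i.score for i in portfolio)
unsupported = [i.label for i in portfolio if not i.evidence]
print([i.label for i in ranked], net_exposure, unsupported)
```

The `unsupported` list is the audit hook: any scored item without recorded evidence is surfaced for review rather than silently trusted.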

5) From data-hungry ML to neurocognitive AI

Beyond scaling conventional data-centric ML, Martín highlights a complementary path:

Neurocognitive / reasoning-centric AI: engineer cognitive agents that emulate and compose human reasoning patterns. Rather than training exclusively on vast data lakes, agents operate over curated knowledge structures and explicit reasoning strategies, with adaptive learning on top.

“Zero-data” AI (ZD-AI) in this context means systems that can operate competently with minimal task-specific data, because the primary asset is structured knowledge and reasoning procedures, not statistical fitting alone.

Reasoning boxes: modularized, reusable reasoning patterns (more than 500 have been identified and modeled in Martín’s line of work) that can be composed into task-specific agents for intelligence, strategy, and operations.

A virtual intelligence analyst: a pipeline that encodes domain-expert cognition (collection planning, source evaluation, hypothesis generation, red-teaming, and structured analytic techniques) to produce mid-complexity intelligence reports in seconds—turning weeks of analyst time into machine-assisted minutes. Human oversight remains essential for sense-making and accountability.

This approach is attractive in mission-critical, sensitive environments (defense, finance, energy) where data are sparse, siloed, or classified, and where explainability and control over inference steps are as important as accuracy.
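The composition idea behind reasoning boxes can be sketched with plain functions. This is a toy under stated assumptions, not Martín's implementation: the two boxes, their string-based rules, and the `compose` helper are hypothetical, and real reasoning patterns would be far richer. What the sketch does show is how a composed agent can record which box ran at each step, so the inference chain stays inspectable.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, List[str]]
Box = Callable[[State], State]


def generate_hypotheses(state: State) -> State:
    # Toy rule: propose one hypothesis per piece of evidence.
    hyps = [f"H{i}: explains '{e}'" for i, e in enumerate(state["evidence"], 1)]
    return {**state, "hypotheses": hyps}


def red_team(state: State) -> State:
    # Toy rule: attach a challenge to every hypothesis.
    challenges = [f"counter to {h.split(':')[0]}" for h in state["hypotheses"]]
    return {**state, "challenges": challenges}


def compose(*boxes: Box) -> Callable[[State], Tuple[State, List[str]]]:
    """Chain boxes in order and return the final state plus an audit trail."""
    def agent(state: State) -> Tuple[State, List[str]]:
        trail: List[str] = []
        for box in boxes:
            state = box(state)
            trail.append(box.__name__)  # record which reasoning step ran
        return state, trail
    return agent


analyst = compose(generate_hypotheses, red_team)
result, trail = analyst({"evidence": ["port closure", "price spike"]})
print(trail)
print(result["challenges"])
```

Because each box is an ordinary function over an explicit state, boxes can be tested in isolation and reordered or swapped without retraining anything, which is the property that makes this style attractive where data are scarce.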

6) Multidomain by design

Security, defense, and conflict are not just kinetic. They propagate across:

Military (land/sea/air/cyber/space),

Criminal & terrorist networks,

Corporate & financial systems,

Energy & environmental infrastructure.

A credible architecture treats these as coupled layers, because financial, information, and energy constraints collapse operational freedom long before kinetic thresholds are reached.

7) Europe’s capability gap is organizational (then technical)

The talk underscores several European bottlenecks:

Capability concentration: too few firms with deep, long-horizon R&D in mission-grade AI; an order of magnitude below what a continent-scale security posture requires.

Talent economics: compensation bands and risk capital often fail to clear for top-end AI engineering and autonomous decision-making expertise—inviting predictable talent flight.

Procurement friction: cartel-like behavior in strategic industries is incompatible with the tempo and openness needed to field frontier systems.

Dependency risk: serially importing compute, platforms, and doctrine from abroad hard-wires strategic fragility.

8) An engineering agenda for European AI superiority

A practical, near-term plan, consistent with Martín’s thesis, could look like this:

Define superiority targets and metrics

Publish target rungs for information, strategy, operations by sector.

Make latency, coverage, fusion quality, decision precision, and cost per correct decision first-class KPIs.

Field neurocognitive agent platforms

Build an open, audited engineering stack for cognitive agents: knowledge representation, composition of reasoning boxes, verification & validation harnesses, human-on-the-loop controls.

Instrument opportunity and risk portfolios

Stand up continuous evidence aggregation pipelines; score risk vectors and opportunity hypotheses; enforce portfolio-style governance (entry/exit, sizing, stopping rules).

Invest in resilience before scale

Prioritize robustness to adversarial conditions (data denial, deception, model drift) and fail-safe behaviors over pure throughput.

Create 100+ deep-R&D “spikes”

Seed and protect mission-grade labs (public, private, joint) with multi-year mandates across defense, finance, energy, and health. Aim for capability pluralism (not monocultures of one vendor or paradigm).

Talent and compensation realignment

Pay market-clearing rates for scarce skills (autonomous decision systems, safety engineering, secure deployment). Couple with mobility across public and private roles.

Procurement as a flywheel

Shift from prescriptive specs to outcome-based tenders with rapid iteration cycles, red-team milestones, and mandatory interoperability tests.
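Two of the KPIs named in this agenda, decision precision and cost per correct decision, are straightforward to compute once decisions are logged. The sketch below assumes a minimal log with three fields; the `DecisionLog` class and the before/after figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DecisionLog:
    decisions: int     # decisions taken in the period
    correct: int       # decisions later judged correct
    total_cost: float  # cost of producing them, in any one currency

    @property
    def precision(self) -> float:
        """Share of decisions that turned out correct."""
        return self.correct / self.decisions if self.decisions else 0.0

    @property
    def cost_per_correct_decision(self) -> float:
        """Total cost divided by correct decisions; infinite if none were correct."""
        return self.total_cost / self.correct if self.correct else float("inf")


before = DecisionLog(decisions=200, correct=120, total_cost=60000.0)
after = DecisionLog(decisions=200, correct=160, total_cost=64000.0)
print(before.precision, before.cost_per_correct_decision)
print(after.precision, after.cost_per_correct_decision)
```

In this made-up comparison total spend goes up while cost per correct decision goes down, which is why the metric is more informative than raw budget or raw accuracy taken alone.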

9) Governance and safety notes

Human accountability: cognitive agents must operate with observable chains of reasoning, audit trails, and reversible decisions.

Dual-use management: embed policy governors and mission constraints at design time; log and review consequential actions.

Data minimization: ZD-AI’s knowledge-first stance is a privacy ally, but rigorous access control and information-hazard review remain mandatory.

10) Conclusion: Capability, not rhetoric

If intention is ubiquitous, capability and vulnerability determine outcomes. Superiority is earned via engineered advances in informational throughput, strategic coherence, and operational precision (measured, audited, and iterated). Europe can still choose that path: build the agent platforms, fund the spikes, instrument uncertainty and pay for the talent. Everything else is commentary.